This post describes grit, which is a vaporware alternative interface to git (grit does not exist).

There are a few alternative interfaces to git, but they typically make the same mistake: they reason that git is complex because it supports many workflows that are only rarely useful, while 10% of the functionality would fill the needs of 90% of the uses. Git’s separation of mechanism and policy is confusing. They then conclude that, therefore, a more opinionated tool with built-in support for a small number of common workflows would be better.

I think this throws the baby out with the bathwater: git’s flexibility is its strength; its problem is rather that the user interface is awful, and that is a conceptually simpler problem. Building a less powerful version also ensures that the system is not fully compatible with git and, therefore, I cannot recommend it as “better git” to students.

Here, I describe an alternative, in the form of an imaginary tool, called grit (named for the most important quality in a student learning git). Grit could be just an alternative interface to a git repository that is completely, 100% compatible, enabling everything that git already does. In fact, it adds one important piece of extra complexity to git’s model.

Here is (a part of) what is wrong with git and how grit fixes it:

1. The git subcommand names are a mess

There has been progress on this front with the introduction of git switch and friends, which fixes the worst offender (the overloading of git checkout to mean a million different operations), but it’s still a mess.

Grit uses a multi-subcommand interface. For example:

- grit branch create
- grit branch list
- grit branch delete and, if there are unmerged commits: grit branch delete --force
- grit branch rename
- and so on…

In particular, grit branch prints a help message.
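Since grit does not exist, the following is only a minimal sketch of how such a two-level interface could be laid out with Python's standard argparse module. The function names (build_parser, main) and the argument names (name, old, new) are assumptions made for illustration; only the subcommand names come from the list above.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical grit parser of the form `grit <group> <action> [args]`."""
    parser = argparse.ArgumentParser(prog="grit")
    groups = parser.add_subparsers(dest="group")

    branch = groups.add_parser("branch", help="create, list, delete, rename branches")
    # Keep a handle on the group parser so a bare `grit branch` can show its help.
    branch.set_defaults(group_parser=branch)
    actions = branch.add_subparsers(dest="action")

    actions.add_parser("list", help="list branches")
    create = actions.add_parser("create", help="create a branch")
    create.add_argument("name")
    delete = actions.add_parser("delete", help="delete a branch")
    delete.add_argument("name")
    delete.add_argument("--force", action="store_true",
                        help="delete even if there are unmerged commits")
    rename = actions.add_parser("rename", help="rename a branch")
    rename.add_argument("old")
    rename.add_argument("new")
    return parser


def main(argv: list[str]) -> str:
    """Return what grit would do (or its help text) instead of doing it."""
    parser = build_parser()
    args = parser.parse_args(argv)
    if args.group is None:
        return parser.format_help()
    if args.action is None:
        # `grit branch` alone performs no action; it only explains itself.
        return args.group_parser.format_help()
    return f"would run: {args.group} {args.action}"
```

Calling main(["branch"]) returns the help text for the branch group, while main(["branch", "delete", "--force", "topic"]) is parsed as a delete action with the force flag set.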

Deleting a remote branch in grit is grit branch delete-remote origin/mytopic (as opposed to git push origin :mytopic — seriously, who can ever discover that interface?). Can you guess what grit tag delete-remote does?
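As a sketch of how thin this layer could be, the hypothetical helper below turns the argument of grit branch delete-remote into an equivalent git invocation. It uses git push with the --delete option, which is the spelled-out form of the colon syntax. The function name is an assumption, as is the rule that everything before the first slash is the remote name.

```python
def delete_remote_branch_argv(remote_branch: str) -> list[str]:
    """Translate 'origin/mytopic' into the git command that deletes it remotely.

    Assumption: the remote name contains no slash, so the text before the
    first '/' is the remote and the rest is the branch name.
    """
    remote, sep, branch = remote_branch.partition("/")
    if not sep or not remote or not branch:
        raise ValueError(f"expected <remote>/<branch>, got {remote_branch!r}")
    return ["git", "push", remote, "--delete", branch]
```

The returned list is in the shape that subprocess.run expects, so the wrapper would not need to go through a shell.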

Git has the concept of porcelain vs. plumbing, which is actually a pretty great concept, but packaged very badly. This is the whole theme of this post: git-the-concept is very good, git-the-command-line-tool is very bad (or, using the convention that normal-font git refers to the concept, while monospaced `git` refers to the tool: git is good, but `git` is bad).

In grit, all the plumbing commands are a subcommand of internal:

- grit internal rev-list
- grit internal ls-remote
- …

Everything is still there, but casual users clearly see that this is not for them.
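One way to picture the internal namespace is as a simple pass-through: a sketch, assuming grit keeps an allow-list of plumbing commands and forwards everything after internal to git unchanged. The PLUMBING set and the to_git_argv function are hypothetical names; the commands listed in the set are existing git plumbing commands.

```python
# Hypothetical allow-list; a real tool would cover all of git's plumbing.
PLUMBING = {"rev-list", "ls-remote", "cat-file", "rev-parse", "update-ref"}


def to_git_argv(grit_argv: list[str]) -> list[str]:
    """Translate `grit internal <cmd> ...` into `git <cmd> ...`."""
    if len(grit_argv) < 2 or grit_argv[0] != "internal":
        raise ValueError("not an internal command")
    command, *rest = grit_argv[1:]
    if command not in PLUMBING:
        raise ValueError(f"unknown plumbing command: {command}")
    # Arguments are forwarded untouched, so nothing git can do is lost.
    return ["git", command, *rest]
```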

2. Grit has a grit undo subcommand

Not only is this perhaps the number one issue that people ask about git, it is absurd that it is so for a version control system! Fast and easy undo is a major selling point of version control, but with git, undoing an action takes some magical combination of git reflog/git reset and despair. In grit, it’s:

grit undo

That’s it! If the working space has not been changed since the last grit command, then it brings it (and internal git status) back to where it was before you used the command. grit anything-at-all && grit undo is always a no-op.

This requires a new concept (similar to stash) in git to store the undo history, but is such an obvious sore point that it’s worth the extra complexity.

Technicalities: Using the option --no-undo means that the command should not generate an undo history entry. While keeping track of undo history is often cheap, there are a few exceptions. For example, grit branch delete-remote requires one to fetch the remote branch to be able to undo its deletion later and --no-undo skips that step for speed. If commits would be lost, the user is prompted for confirmation (unless --force is used, in which case, the branch is deleted, promptly, forcefully, and forever).
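The undo history can be pictured with a toy model. The sketch below is not how git stores anything: it assumes the repository state is just a mapping from ref names to commit ids, and that every grit command snapshots that mapping before it runs. The Repo class and its method names are made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Repo:
    """Toy repository: refs map names to commit ids (hypothetical model)."""
    refs: dict[str, str] = field(default_factory=dict)
    undo_log: list[dict[str, str]] = field(default_factory=list)

    def run(self, command: Callable[[dict[str, str]], None], *, no_undo: bool = False) -> None:
        """Run a command, recording the prior state unless --no-undo was given."""
        if not no_undo:
            self.undo_log.append(dict(self.refs))  # snapshot before mutating
        command(self.refs)

    def undo(self) -> bool:
        """Restore the state from before the last recorded command."""
        if not self.undo_log:
            return False  # nothing to undo
        self.refs = self.undo_log.pop()
        return True


repo = Repo(refs={"main": "a1b2c3", "topic": "d4e5f6"})
before = dict(repo.refs)
repo.run(lambda refs: refs.pop("topic"))  # stands in for `grit branch delete topic`
repo.undo()                               # stands in for `grit undo`
```

After the last two lines, repo.refs equals before again, which is the "command followed by undo is a no-op" property. A command run with no_undo=True leaves no entry in the log, so it cannot be undone.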

3. Grit has no multi-command wizards by default

Wizards are typically associated with graphical interfaces: a series of menus where the user inputs all the information needed for a complex task.

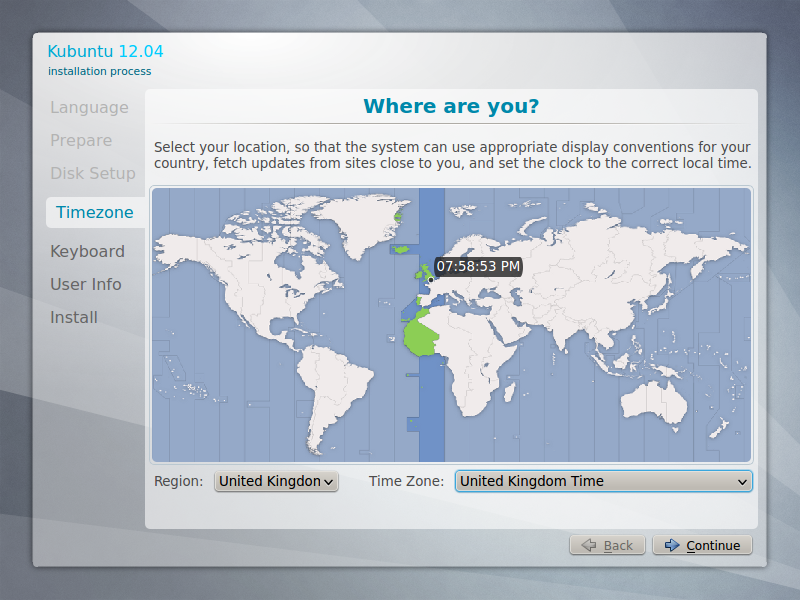

Amazon’s checkout wizard is one many of us use regularly, but here is an example from the Wikipedia page on Wizards:

On the command line there are two ways to build wizards: (i) you open a command-line dialog (do you want to continue? [Y/n] and so on) or (ii) you require multiple command invocations and keep state between them. Regular git has both of these, but it prefers to use (ii), which is the more complicated of the two.

For an example of (ii): if you rebase and there is a conflict, it will drop you into the shell, expect you to fix the conflict, require a few git add operations, then git rebase --continue. Many git commands take --continue, which is a sure sign of a wizard.

For an example of (i), you can use git add -p: it will query you on the different chunks, and at the end execute the operation. Git add -p is actually great in that even if you have already accepted some chunks, if you use CTRL-C to quit, it will cancel the whole operation.

Grit also has both, but prefers to use (i) whenever possible. If there is a conflict, it will start an interface similar to the existing git add -p and work chunk-by-chunk. It can start a subshell if you need more time or leave the state in suspended animation (as happens now with git), but that is not the default. If you abort the operation (CTRL-C, for example), it cancels everything and leaves you in the same situation as before you started the operation.
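The all-or-nothing behaviour is easy to get if the wizard only collects decisions and touches nothing until the last question is answered. Here is a minimal sketch under that assumption, using a toy working tree (a dict from path to content) and conflict chunks given as (ours, theirs) pairs. All names are hypothetical.

```python
from typing import Callable, Optional

Chunk = tuple[str, str]  # (ours, theirs) for one conflicting region


def resolve(chunks: list[Chunk], choose: Callable[[Chunk], str]) -> Optional[list[str]]:
    """Ask about every chunk; return None if the user aborts with CTRL-C."""
    decisions = []
    try:
        for chunk in chunks:
            decisions.append(choose(chunk))
    except KeyboardInterrupt:
        return None  # earlier answers are discarded along with the rest
    return decisions


def merge_file(worktree: dict[str, str], path: str,
               chunks: list[Chunk], choose: Callable[[Chunk], str]) -> dict[str, str]:
    """Return the new working tree, or the original one if the wizard was aborted."""
    decisions = resolve(chunks, choose)
    if decisions is None:
        return worktree  # exactly the state we started from
    return {**worktree, path: "".join(decisions)}
```

In an interactive tool, choose would prompt the user for each chunk; pressing CTRL-C inside it raises KeyboardInterrupt, and merge_file then hands back the working tree it was given.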

Arguably, the use of the index (staging area) can be seen as a form of commit wizard, but it’s so fundamental to git that grit keeps it.

4. Grit performs no destructive actions without the --force flag!

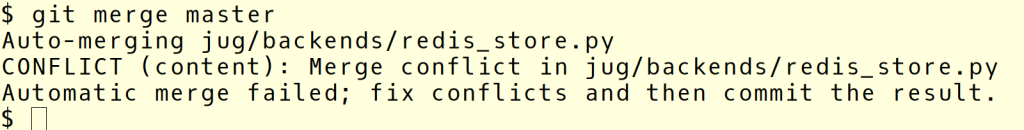

With git, it’s impossible to know whether a command will destroy anything. For example, when merging, it may or may not work:

If there is a conflict, it will clobber your files with those awful <<<<< lines!

This happens with a lot of git commands: git checkout may or may not overwrite your changes (causing them to be thrown away). In fact, anything that causes a merge may lead to the conflict situation.

With grit, if something is non-trivial to merge or will potentially destroy an existing file, it will either (1) refuse to do it or require confirmation or (2) open a wizard immediately. For example, merge conflicts result in a wizard being called to merge them. If you want the old-school clobbering, you can always choose the --conflict-to-file option (on the command line or in the wizard itself).
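The "refuse unless --force" rule can be sketched as a check that runs before anything is written. The model below is deliberately crude and entirely hypothetical: working tree, current commit (head) and target commit are dicts from path to content, and a checkout replaces the whole working tree with the target.

```python
class WouldDestroy(Exception):
    """Raised when an operation would discard local content without --force."""


def checkout(worktree: dict[str, str], head: dict[str, str],
             target: dict[str, str], *, force: bool = False) -> dict[str, str]:
    """Toy checkout: returns the new working tree or refuses to proceed."""
    # A path is at risk if its local content is saved neither in the
    # current commit nor in the commit being checked out.
    at_risk = sorted(
        path for path, content in worktree.items()
        if content != head.get(path) and content != target.get(path)
    )
    if at_risk and not force:
        raise WouldDestroy(f"local changes would be lost: {', '.join(at_risk)}")
    return dict(target)
```

With a clean working tree the call succeeds silently. With unsaved edits it raises WouldDestroy and changes nothing, unless force=True is passed explicitly.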

Final words: grit does not exist, so we cannot know whether git’s problems really are mostly at the surface or if a deeper redesign really is necessary. Maybe something like grit will be implemented, and it will turn out that it is still a usability nightmare. However, one needs to square the git circle: how did it win the version control wars when it is so confusing? It was not on price and, particularly for the open-source world, it was not by management imposition. My answer remains that git’s power and flexibility (which derive from its model as a very flexible enhanced quasi-filesystem under the hood) are a strength that is worth knowing about and worth climbing that learning curve for, but git’s command line interface is an atrocious mess of non-design.